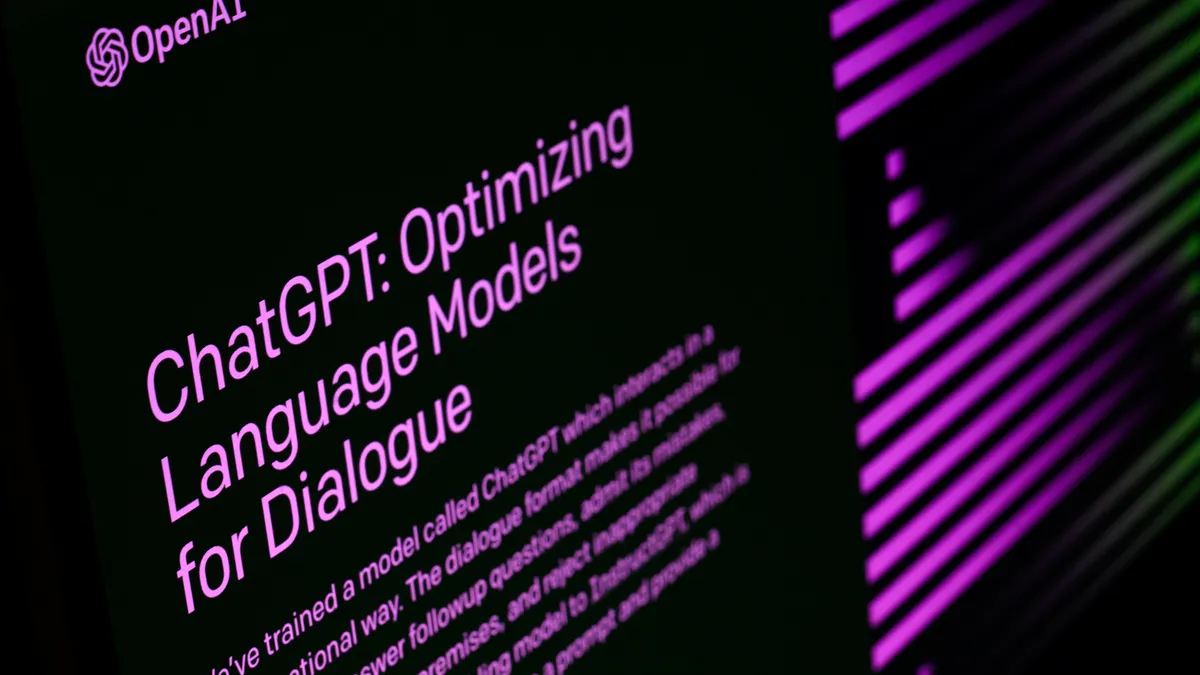

ChatGPT, one of the most well-known artificial intelligence chatbots, can now produce college-level pieces of writing with a few good prompts. Its essays have even passed tests at prestigious business and law schools.

The program's meteoric rise in the months since it launched has forced higher education to grapple with concerns over academic integrity and AI's use in the classroom.

But conversations around cheating aren't new, even if generative AI is, according to Sarah Cabral, a senior scholar of business ethics at Santa Clara University's Markkula Center for Applied Ethics.

In a recent conversation, she shared with Higher Ed Dive how ChatGPT took educators by surprise and why incorporating character education into the curriculum is as important as teaching biology or world history.

This interview has been edited for clarity and length.

HIGHER ED DIVE: The popularity of generative artificial intelligence has skyrocketed since ChatGPT launched in November. Were faculty prepared?

SARAH CABRAL: I don't know that most educators were really having conversations about this until ChatGPT was a reality, and by then, students were already using it.

I was personally surprised by the capabilities of AI when students started referencing what ChatGPT was able to do. And I don't know why that's the case because in some ways, it's unsurprising. It seems like the logical next iteration of AI. But personally, I was still caught off guard.

A majority of colleges haven't issued rules on whether or how ChatGPT should be used in academic settings. How have you seen individual teachers react to the technology?

Many educators I've talked to are going back to the basics and having students handwrite in-class assessments to avoid the temptation altogether. It can be inconvenient, but it's a relatively simple workaround until we figure out how best to use ChatGPT to students' and teachers' advantage.

For a longer-term approach, others are considering how we could have students create a first draft with ChatGPT and then submit that along with a final draft to showcase how they were able to build upon the initial work of the bot.

I don't know that I have a single best practice to hold up. But the perspective of my colleagues is that it doesn't make sense to ban ChatGPT.

There are still consequences for students who secretly use ChatGPT, of course. I know of one colleague who read an essay that didn't seem to be in the voice of the student. When that student was pressed, she admitted that she had used ChatGPT to create the essay and there were consequences for that. So, while it's not being banned yet, students can't falsely claim that this is their work and submit it as such.

In the absence of a ban on programs like ChatGPT, what can colleges do to ensure students use them properly?

This is a great question. What fascinates me is what might cause a student to not use ChatGPT even if they could get away with it, because not many faculty would be able to catch it. The student I mentioned was caught only because she eventually disclosed it herself. The teacher wouldn't have been able to fully prove the hunch.

This really highlights the need to have conversations with students about character. Like, who are you as a person, and how do you feel about being someone who is dishonest? Because if you practice dishonesty enough, that's who you become.

I want to know if that is enough of a disincentive, outside of any policy, for students to avoid using ChatGPT.

How can faculty, and colleges more broadly, incorporate ethics and character education into the AI discussion?

I'm obviously a proponent of ethics education, but it doesn't have to be limited to a separate ethics class. There can be training for faculty around how to have conversations that involve values and ethics within any subject — English, history, science. I don't think you need a PhD in philosophy to do that.

There's a long history of character education in schools, but I don't think it's as commonly practiced today. We've moved away from having those conversations.

My stance is in line with David Foster Wallace's famous commencement speech at Kenyon College. He said the goal of an education isn't the attainment of knowledge. It's to become aware of the fact that we have the choice to decide what to think about.

The higher education sector offers a lot of short-term credentials and bootcamps, especially in the technology field. Can these programs offer a holistic approach like the one you're describing?

Programs that are abbreviated or have students really specialize in particular areas are going to have to cut things out of the curriculum. That shows what the school views as important and what it thinks will add value to the students' future.

We get to decide what has meaning and what doesn't. And I think it's really limiting when schools offer these specialized or certificate programs. If that's in addition to a more robust liberal arts education, that's fantastic. But if it's a substitute for a liberal arts education, I think something is lost.